Clotho: An audio captioning dataset

I’m happy to announce the release of Clotho! A novel audio captioning dataset, consisting of audio samples of 15 to 30 seconds duration, each audio sample having five captions of eight to 20 words length. There is a total of 4981 audio samples in Clotho, with 24 905 captions (i.e. 4981 audio samples * 5 captions per each sample). Clotho is built with focus on audio content and caption diversity, and the splits of the data are not hampering the training or evaluation of methods. All audio samples are from the Freesound platform, and captions are crowdsourced using Amazon Mechanical Turk and annotators from English speaking countries. Unique words, named entities, and speech transcription are removed with post-processing.

Clotho is freely available at Zenodo platform (link).

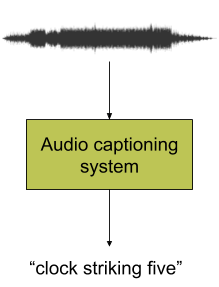

If you are not familiar with audio captioning, it is the task of general audio content description using free text. It is an intermodal translation task (not speech-to-text), where a system accepts as an input an audio signal and outputs the textual description (i.e. the caption) of that signal. Audio captioning methods can model concepts (e.g. "muffled sound"), physical properties of objects and environment (e.g. "the sound of a big car", "people talking in a small and empty room"), and high level knowledge ("a clock rings three times"). This modeling can be used in various applications, ranging from automatic content description to intelligent and content oriented machine-to-machine interaction. An example of audio captioning system is at the following image.

Clotho has a total of 4365 unique words and is divided in three splits: development, evaluation, and testing. Development and evaluation splits are publicly available, and testing split is withheld for purposes of scientific challenges. One audio sample in Clotho appears only in one split. Also, in Clotho there is not a word that appears only in one split. Additionally, all words appear proportionally between splits (the word distribution is kept similar across splits), i.e. 60% in the development, 20% in the evaluation, and 20% in the testing split.

Words that could not be divided using the above scheme of 60-20-20 (e.g. words that appear only two times in the all three splits combined), appear at least one time in the development split and at least one time to one of the other two splits. More detailed info can be found on the paper presenting Clotho, available online at arXiv (link) or in this web site, at the publications tab (link).

Audio samples

Clotho audio data are extracted from an initial set of 12 000 audio files collected from Freesound platform. The 12k audio files have durations ranging from 10s to 300s, no spelling errors in the first sentence of the description on Freesound, good quality (44.1kHz and 16-bit), and no tags on Freesound indicating sound effects, music or speech. Before extraction, all 12k files were normalized and the preceding and trailing silences were trimmed.

The content of audio samples in Clotho greatly varies, ranging from ambiance in a forest (e.g. water flowing over some rocks), animal sounds (e.g. goats bleating), and crowd yelling or murmuring, to machines and engines operating (e.g. inside a factory) or revving (e.g. cars, motorbikes), and devices functioning (e.g. container with contents moving, doors opening/closing). For a thorough description how the audio samples are selected and filtered, you can check the paper that presents Clotho dataset, available online at arXiv (link) or in this web site, at the publications tab (link).

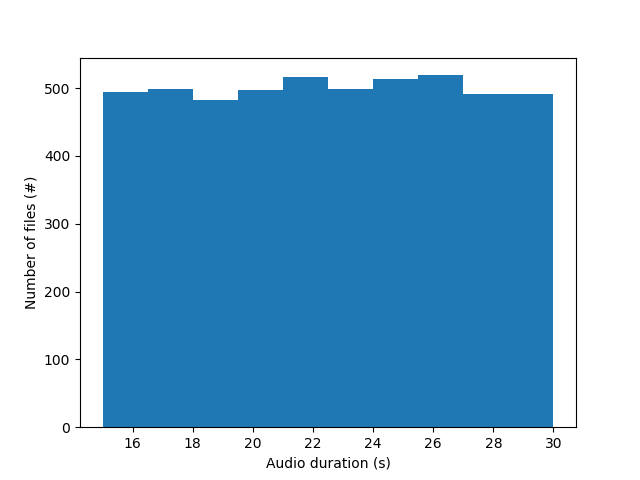

In the following figure is the distribution of the duration of audio files in Clotho.

Captions

The captions in the Clotho dataset range from 8 to 20 words in length, and were gathered by employing the crowdsourcing platform Amazon Mechanical Turk and a three-step framework. The three steps are:

- audio description,

- description editing, and

- description scoring.

In step 1, five initial captions were gathered for each audio clip from distinct annotators. In step 2, these initial captions were edited to fix grammatical errors. Grammatically correct captions were instead rephrased, in order to acquire diverse captions for the same audio clip. In step 3, the initial and edited captions were scored based on accuracy, i.e. how well the caption describes the audio clip, and fluency, i.e. the English fluency in the caption itself. The initial and edited captions were scored by three distinct annotators. The scores were then summed together and the captions were sorted by the total accuracy score first, total fluency score second. The top five captions, after sorting, were selected as the final captions of the audio clip. More information about the caption scoring (e.g. scoring values, scoring threshold, etc.) is at the corresponding paper of the three-step framework.

We then manually sanitized the final captions of the dataset by removing apostrophes, making compound words consistent, removing phrases describing the content of speech, and replacing named entities. We used in-house annotators to replace transcribed speech in the captions. If the resulting caption were under 8 words, we attempt to find captions in the lower-scored captions (i.e. that were not selected in step 3) that still have decent scores for accuracy and fluency. If there were no such captions or these captions could not be rephrased to 8 words or less, the audio file was removed from the dataset entirely. The same in-house annotators were used to also replace unique words that only appeared in the captions of one audio clip. Since audio clips are not shared between splits, if there are words that appear only in the captions of one audio clip, then these words will appear only in one split. This process yields a total of 4981 audio samples, each having five captions and amounting to a total of 24 905 captions.

A thorough description of the three-step framework can be found at the corresponding paper, available online at arXiv (link) or in this web site, at the publications tab (link).

Who Clotho was: check this link!