I’m happy to share that the first-ever challenge task on automated audio captioning is over! The task was part of the DCASE2020 Challenge and received 34 (!) submissions from 10 teams, spanning 13 different institutions and over 30 authors. The Clotho dataset was used in the challenge, and the winning submission achieved an impressive SPIDEr score of 0.22 on the Clotho testing split.

In this blog post, I will present the challenge and outline some elements of the submissions. Stay tuned for the upcoming paper (which will be published only on arXiv), analysing the outcomes and systems of the audio captioning task.

What is audio captioning (instead of an intro)

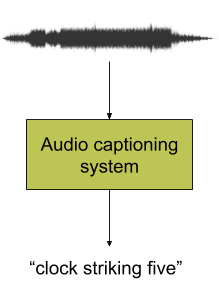

For those not familiar with it, automated audio captioning is the task of general audio content description using free text. It is an intermodal translation task (not speech-to-text), where a system accepts an audio signal as input and outputs a textual description (i.e. the caption) of that signal. Audio captioning methods can model concepts (e.g. "muffled sound"), physical properties of objects and environment (e.g. "the sound of a big car", "people talking in a small and empty room"), and high-level knowledge (e.g. "a clock rings three times"). This modelling can be used in various applications, ranging from automatic content description to intelligent and content-oriented machine-to-machine interaction.

Figure 1. Illustration of automated audio captioning system and process.

Task target and dataset

The first-ever audio captioning task was hosted at the DCASE2020 Challenge (as task 6). For the (automated) audio captioning task, the participants had to employ the freely available audio captioning dataset, Clotho, and develop methods that take audio as input and produce a textual description of that audio. To do that, the participants had access to the two freely available splits of Clotho, the development and evaluation splits. The development split of Clotho contains 2893 audio clips with five captions each, amounting to a total of 14465 audio-and-caption pairs. The evaluation split contains 1045 audio clips with (again) five captions each, amounting to a total of 5225 audio-and-caption pairs.

The performance of the methods submitted to the audio captioning task was assessed using six machine translation metrics and three captioning metrics. The six machine translation metrics are

1. BLEU1

2. BLEU2

3. BLEU3

4. BLEU4

5. ROUGEL

6. METEOR

And the three captioning metrics are

1. CIDEr

2. SPICE

3. SPIDEr

In a nutshell, BLEUn measures a modified precision of n-grams (e.g. BLEU2 for 2-grams), METEOR measures a harmonic mean-based score of the precision and recall for unigrams, and ROUGEL is a longest common subsequence-based score.
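To make two of these descriptions concrete, here is a minimal sketch of the clipped ("modified") n-gram precision that BLEUn builds on, and of the longest common subsequence length that ROUGEL builds on. This is not the evaluation code used in the challenge: the function names, the whitespace tokenisation, and the example captions are assumptions for illustration, and the full metrics add further steps (e.g. the brevity penalty in BLEU) that are left out here.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(candidate, references, n):
    """Clipped n-gram precision of one candidate against several references.

    Each candidate n-gram is credited at most as many times as it occurs
    in the single reference where it occurs most often.
    """
    cand_counts = Counter(ngrams(candidate.split(), n))
    if not cand_counts:
        return 0.0
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref.split(), n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(count, max_ref_counts[gram])
                  for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


if __name__ == "__main__":
    # Hypothetical captions, not taken from Clotho.
    refs = ["the cat is on the mat"]
    print(modified_precision("the the the the", refs, 1))
    print(modified_precision("the the the the", refs, 2))
    print(lcs_length("a dog barks loudly".split(),
                     "a dog loudly barks".split()))
```

The clipping is what stops a degenerate caption that repeats one frequent word from getting a perfect unigram precision.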

CIDEr measures a weighted cosine similarity of n-grams, SPICE measures the ability of the predicted captions to recover the objects, attributes, and relationships between them that appear in the ground truth captions, and SPIDEr is a weighted mean of CIDEr and SPICE, exploiting the advantages of both metrics.
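As a small sketch of that last combination: assuming the commonly used equal weighting of the two metrics (the weight is exposed as a parameter here, and the function name and input scores are made up for illustration), SPIDEr can be computed from already available CIDEr and SPICE scores as follows.

```python
def spider(cider: float, spice: float, w_cider: float = 0.5) -> float:
    """Weighted mean of CIDEr and SPICE.

    w_cider = 0.5 is an assumption reflecting the usual equal weighting;
    the SPICE weight is the remainder, 1 - w_cider.
    """
    if not 0.0 <= w_cider <= 1.0:
        raise ValueError("w_cider must be between 0 and 1")
    return w_cider * cider + (1.0 - w_cider) * spice


if __name__ == "__main__":
    # Hypothetical scores, not results from the challenge.
    print(spider(cider=0.4, spice=0.1))
```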

Details and ranking of submissions

All submissions were based on deep neural networks, involving methods based on an encoder that processed the audio, a decoder that took the output of the encoder and produced the caption, and an alignment mechanism between the encoder and the decoder. The median of the reported total number of parameters is 10 M (10 million), with a maximum of 140 M and a minimum of 2 M.

Four of the submissions used a transformer as an encoder, 14 an RNN-based encoder, four a CRNN encoder, and 13 a CNN-based encoder. On the decoder side, 27 submissions used an RNN-based decoder and six a transformer.

The submissions were ranked using two schemes. The first ranks teams and the second ranks systems. For the teams ranking, if the same authors had multiple submissions, then only their best-ranking submission (according to SPIDEr) was considered. For the systems ranking, all the submissions were ranked according to their SPIDEr score. You can see the ranking of the submissions, according to the different schemes, here for teams ranking and here for systems ranking.
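The two schemes can be sketched in a few lines. This is only an illustration of the logic described above, not the organisers' ranking script; the `Submission` type, the function names, and the example entries are hypothetical.

```python
from typing import NamedTuple


class Submission(NamedTuple):
    team: str
    system: str
    spider: float


def systems_ranking(submissions):
    """Rank every submission by SPIDEr, best first."""
    return sorted(submissions, key=lambda s: s.spider, reverse=True)


def teams_ranking(submissions):
    """Keep only each team's best submission by SPIDEr, then rank those."""
    best = {}
    for sub in submissions:
        if sub.team not in best or sub.spider > best[sub.team].spider:
            best[sub.team] = sub
    return systems_ranking(best.values())


if __name__ == "__main__":
    # Hypothetical entries, not the actual challenge results.
    subs = [
        Submission("team_a", "a_1", 0.20),
        Submission("team_a", "a_2", 0.22),
        Submission("team_b", "b_1", 0.21),
        Submission("team_c", "c_1", 0.15),
    ]
    for rank, sub in enumerate(teams_ranking(subs), start=1):
        print(rank, sub.team, sub.system, sub.spider)
```

In the systems ranking, a team with several strong systems can occupy several top positions; the teams ranking collapses those into a single entry per team.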

The top five team submissions, with their score and corresponding authors, are:

1. First team submission with SPIDEr of 0.222: Koizumi Yuma, NTT Corporation, Japan

2. Second team submission with SPIDEr of 0.214: Yusong Wu, Beijing University of Posts and Telecommunications, Beijing, China

3. Third team submission with SPIDEr of 0.196: Yuexian Zou, ADSPLAB, School of ECE, Peking University, Shenzhen, China

4. Fourth team submission with SPIDEr of 0.194: Xuenan Xu, MoE Key Lab of Artificial Intelligence SpeechLab, Department of Computer Science and Engineering AI Institute, Shanghai Jiao Tong University, Shanghai, China

5. Fifth team submission with SPIDEr of 0.150: Javier Naranjo-Alcazar, Computer Science Department, Universitat de València, Burjassot, Spain

You can find the technical reports of all submissions at the corresponding page of the audio captioning task.

Stay tuned for the upcoming paper describing the outcomes and systems of the audio captioning task, and for the very interesting audio captioning papers at the DCASE2020 Workshop!